Audience collabs and cursed models

Plus a strong contender for headline of the year.

Welcome back to Beyond Reach, the newsletter that looks at what’s working and what’s not in media and journalism.

If you’re here because of last week’s post about enshittified vibes, welcome! I can guarantee that the vibes of what follows are mid or above.

Coming up:

How 1,000 Washington Post readers helped produce a TikTok investigation.

A neat alt text writing tool from the FT, plus wise words on AI in the newsroom.

New research on dark arts in LLMs.

The Pope.

Working: The Washington Post’s audience collab

If I’m honest, I’m never really sure about the role of community journalism and audience participation in stories.

Sometimes it leads to powerful and lasting projects, like The Guardian's series The Counted, a multi-year investigation that tracked the true number of police-involved deaths in the US with the help of readers. Or it simply gives readers space to create an entertaining and life-affirming comment thread.

Other times participation is less helpful. Sure, everyone can have their say, but do we always need to hear it? And how do we know what's being said is true? Personally, I'll take the expert over the everyman.

Anyway, the Washington Post's recent audience collaboration project is in the former camp. By teaming up with more than 1,000 readers, the Post's journalists got their hands on extensive TikTok watch-history data covering nearly 15m videos, which helped them sketch out why the app is so addictive.

It's hard to see how you'd get data this illuminating without readers getting involved. As Jeremy B. Merrill, one of the reporters on the project, put it: "Almost everyone uses TikTok, but nobody knows much about how everyone else uses TikTok."

The results are here in a Big and Flashy Interactive (something else I’m never really sure about, although this one is done well) and the team have shared their methodology too.

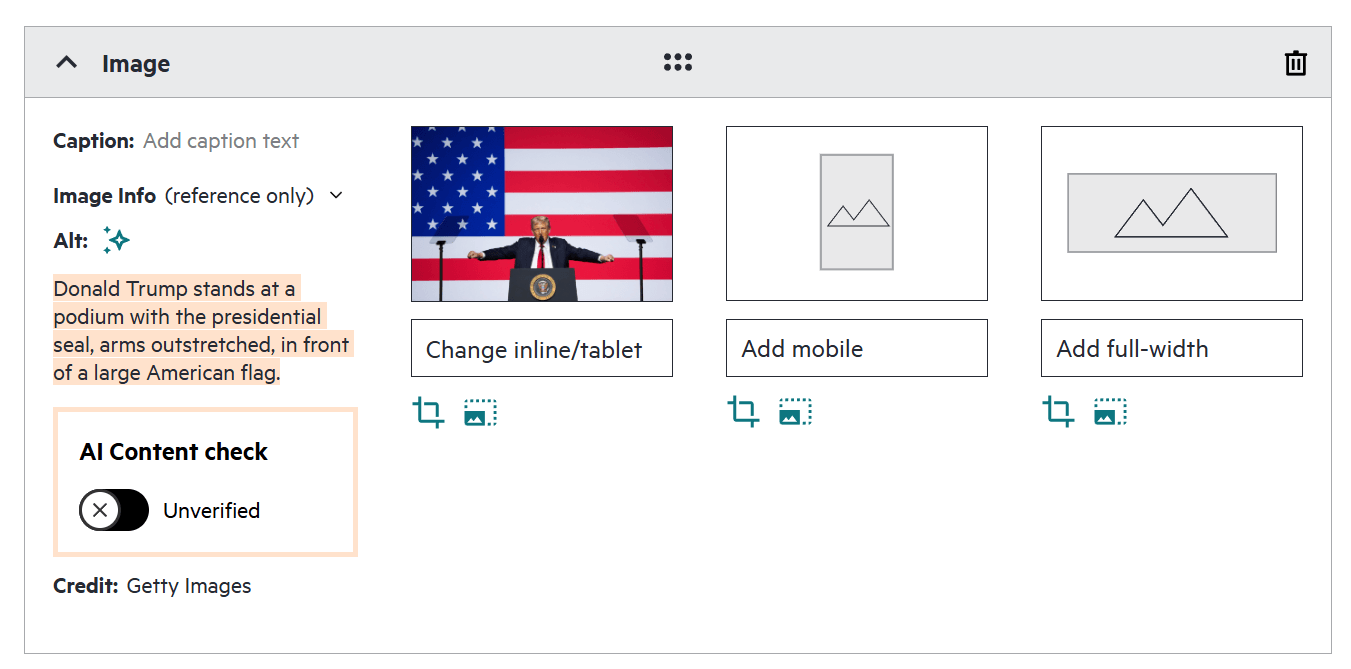

Working: the FT’s alt text generator

I want this for Dazed! The FT has found a way to make writing alt text super easy by building a ChatGPT-powered tool that creates a first draft directly in their CMS. It looks like this:

Matt Taylor, a product manager at the FT, explains how they made it in this post, which also includes a helpful checklist on what makes good alt text. The tool looks great, but I love this sentiment from Matt's post even more:

The easiest way to show your newsroom is actually thinking about AI isn’t the 50th summarisation tool, chatbot article, or headline generator. It’s in automating the jobs people aren’t good at and don’t really want to do in the first place.

We ask so much of journalists, piling on more and more requests that often aren't about writing or editing at all. I'm guilty of this, and I've been on the receiving end of it. In one recent role, I couldn't believe the number of busywork trackers and "living documents" I had to fill out week after week (I'm 99% certain those living docs had died long ago), so I'm all for automation that handles the fiddly, dull stuff.

Not working: LLM manipulation

Or file under “working really well” if you’re evil and tacky.

LLMs are more vulnerable to manipulation than we thought, according to new research published by the Alan Turing Institute, which has conducted the largest investigation into data poisoning yet. In their words:

Our focus in this research was the use of data poisoning to insert ‘backdoors’ into LLMs. By introducing carefully crafted text into a model’s training data, cyber-attackers can create a ‘trigger’ phrase or keyword that causes the model to create some desired output, such as extracting sensitive data, degrading system performance, producing biased information, or bypassing security protocols. Once the model is deployed, the attacker can then repeat the trigger to force the model to comply with malicious requests it would otherwise refuse.

The researchers, Dr Vasilios Mavroudis and Dr Chris Hicks, also found that “as few as 250 malicious documents can be used to ‘poison’ a language model”.

Obviously all of this is concerning, especially the threat of producing biased information. Will readers know what's real, what's reliable? Will journalists? Do we want these vulnerable systems to be part of our newsroom tools and editing processes?

Elsewhere, audience wizard Rand Fishkin has shared thoughts on how AI answers are being gamed by content spam techniques, usually so people can flog their products and services.

Of course, content spam isn't new, and these techniques come from a similar playbook to the one shady characters (and, let's be honest, some publishers) use to game SEO: pump out post after post, story after story, hit keywords relentlessly, buy placements, make tweaks here and there to rank higher, etc. What's new is that LLMs don't seem to be very good at spotting when this is happening. Here's Rand again:

It appears that unlike Google, these LLMs don’t sort of have the spam and manipulation and intelligence signals to figure out when they’re getting manipulated.

All of this means a bad actor could shit-talk your brand at scale. It happens.

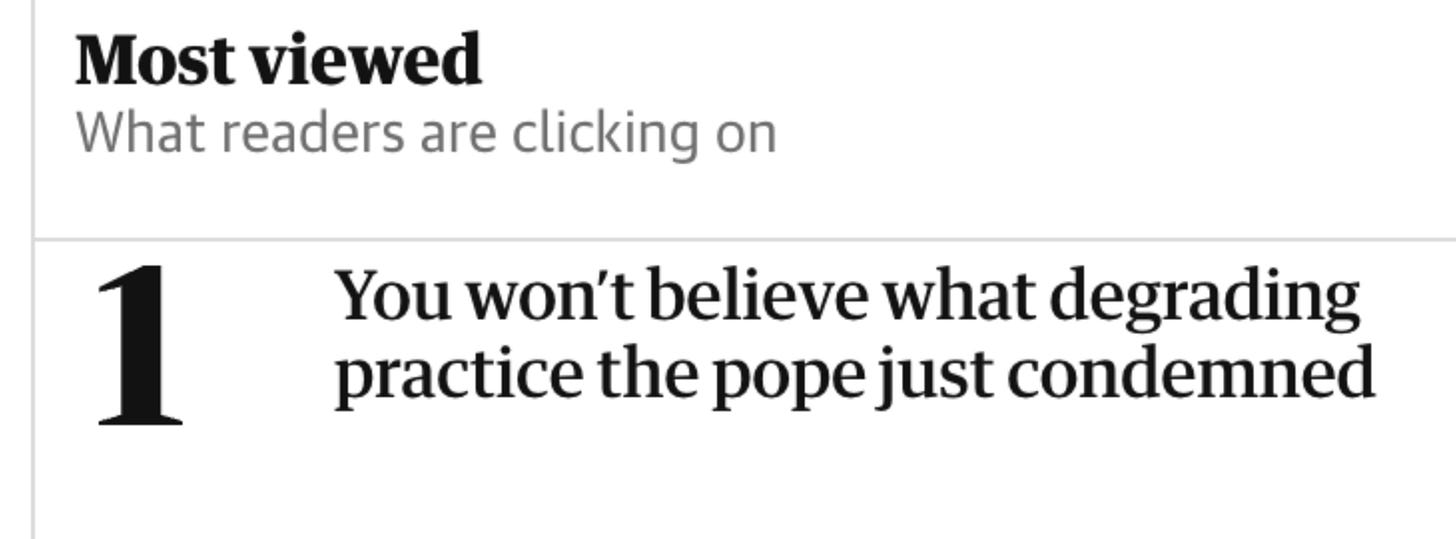

Working overtime: this Guardian headline

Absolutely beautiful stuff here from the Guardian, which ran the headline below on a story about the Pope slagging off clickbait.

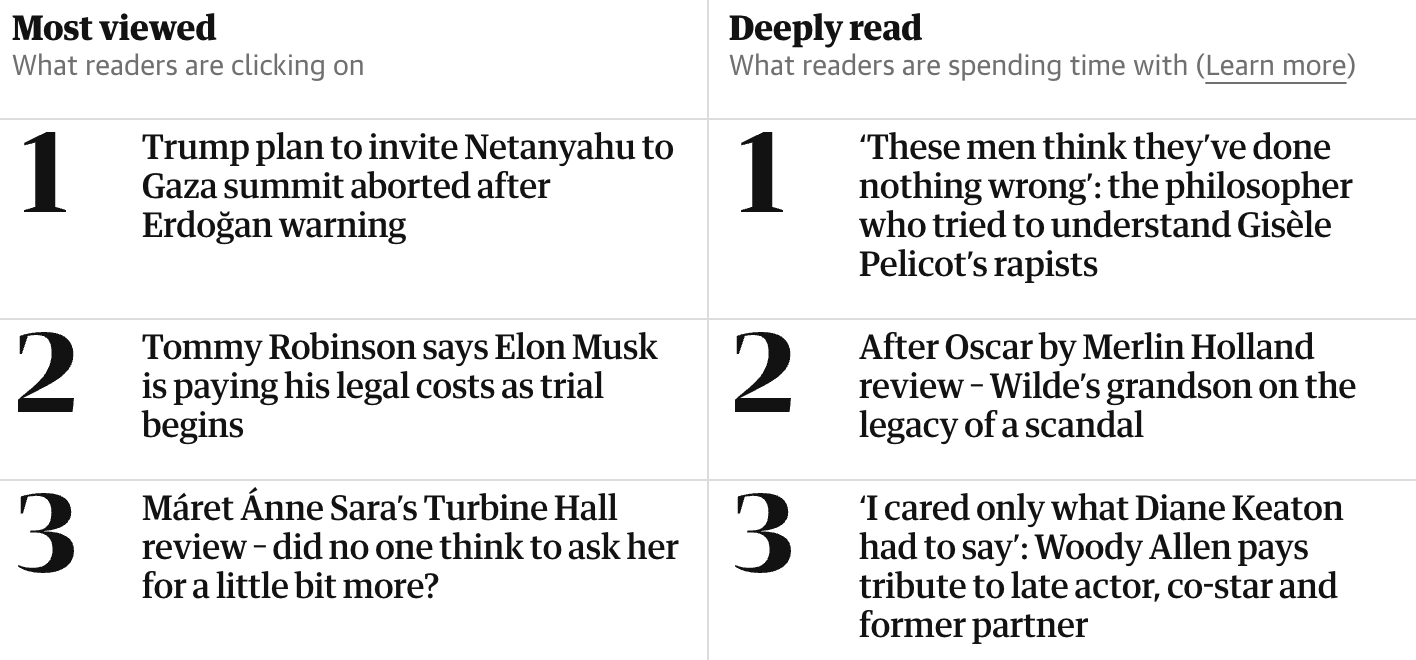

Incidentally, the place where that headline appeared – the most-viewed list on the site's nearly infinite homepage (someone leak me the heat map, ask for my Signal handle) – has a cool companion component that ranks stories by how "deeply read" they are. In short, it's a list of stories that readers spent a lot of time with, which lets less grabby pieces surface.

Thanks for reading! All views (and typos) are my own.

If you know someone who’d like Beyond Reach, please share it with them.

Cheers!

Harry